The Art of Visual Cues: A Simple Yet Powerful Tool for Environment Identification

Are you tired of the confusion and mix-ups that come with managing Power Apps across multiple environments? We have all been there: one minute we are confidently testing a new feature, and the next we are lost until we realize we are in the production environment, not our development or testing environment. It can be surprising, confusing, and disorienting.

But what if there was a way to make our apps, and more importantly our users, more environmentally aware? Imagine an app that clearly shows whether it is in development, testing, or production. This isn’t just a dream; it is entirely possible, and we are going to explore how. With a combination of environment variables, labels, and color properties, we can transform our apps and adjust their appearance based on the environment they are in.

In this guide we will go from apps that are indistinguishable between environments to apps that are informative and allow us to develop, test, and use our apps with confidence.

Dive in and explore as we start with the basics of environment variables and then move to advanced techniques for dynamic configuration. By the end of the guide, we will not only know how to do this, but also understand why it is helpful when managing Power Apps across different environments.

Here is what this guide will cover:

- Understanding Power Apps Environments and Environment Variables

- The Significance of Environmental Awareness in Power Apps

- Building The App

- Adding the Environmental Visual Cues

- Wrapping Up: Ensuring Clarity and Efficiency in Your Power Apps

Understanding Power Apps Environments and Environment Variables

Power Apps is a fantastic and powerful platform that allows us to create custom business applications with ease. However, as our projects and solutions grow, we begin to dive into the realm of application development, and we encounter the need for efficient management. This is precisely where Power Apps environments step in to save the day.

Introduction to Power Apps Environments

Environments act as unique containers that help facilitate and guide us through the entire app development journey. Each environment is a self-contained unit, ensuring that our data, apps, and workflows are neatly compartmentalized and organized. This structure is particularly beneficial when managing multiple projects or collaborating with a team. Environments are like distinct workspaces, each tailored for specific stages of our app development journey.

These environments let us build, test, and deploy our applications with precision and control, ensuring that chaos never gets in the way of our creativity while crafting our Power Apps applications.

The Role of Different Environments

Let’s shed some light on the roles played by different environments. Environments can be used to target different audiences or for different purposes such as development, testing, and production. Here we will focus on staged environments (dev/test/prod), an environment strategy that helps ensure that changes made during development do not break the app users’ access in our production environment.

First up is the development environment – the birthplace of our app ideas. It is where we sketch out our vision, experiment with various features and lay the foundation for our apps.

Next is the testing or QA environment, which takes on the role of the quality assurance center. In this environment we examine our app, validate its functionality and user experience, and ensure everything works seamlessly and as expected before it reaches our end users.

Lastly, we have our production environment, where our apps go live. It is the real-world stage where our apps become accessible to their intended users, interact with live data, and require stability and reliability.

The Importance of Power Apps ALM

We cannot get too far into exploring Power Apps environments and environment strategies without mentioning Application Lifecycle Management (ALM). ALM is a pivotal aspect of successful software development, and our Power Apps are no exception. ALM within Power Apps helps ensure a smooth transition between the development, testing, and production phases of our projects. It encompasses maintaining version control, preventing disruptions, and streamlining the deployment process.

If you are curious to learn more about Power Apps ALM, I encourage you to visit the post below that discusses its different aspects including how to implement ALM, where Power Apps solutions fit into the picture, version control, change management, and much more.

Streamline Application Lifecycle Management with Power Platform Solutions

Explore how ALM can enhance collaboration, improve performance, and streamline development in Power Platform solution.

The Significance of Environmental Awareness in Power Apps

Common Challenges in Multi-Environment Scenarios

Navigating through multiple environments in Power Apps can sometimes feel like a tightrope walk. One common challenge is keeping track of which environment we are working in and which environment’s app we are accessing in our browser. It can be easy to lose track, especially when we are deep in development and testing.

Imagine a scenario where we created a new feature in development, and as the developer we wish to verify this change before deploying the update to our testing environment. But as often happens, something comes up and we cannot check the functionality right away. When we come back later, we launch the app using the web link URL, and we don’t see our expected update. Was there an issue with how we developed the change? Are we viewing the development app or the testing app? These questions lead to more confusion, all of which could be avoided if our app clearly indicated the environment it resides in, no matter how we access the app.

Importance of Identifying the Current Environment

Informing us and other app users, especially those who use the app across different environments, about the current environment is a matter of control and safety. Being aware of our environment ensures that we are in the correct environment to carry out our tasks.

Identifying the environment is crucial for effective testing of our apps. By knowing we are in the right testing environment, we can experiment and troubleshoot without the fear of affecting the live application or data.

Moreover, it aids in communication within our teams. When everyone is on the same page about the environment they should be working in for specific tasks, collaboration becomes smoother, and we can minimize the chances of errors. The goal is to create a shared understanding and a common approach among team members.

Building The App

Setting the Stage

For our app we will be using a SharePoint List as the data source. Each environment will use its own specific list, so we have 3 different lists that are on different SharePoint sites. Once the lists are created, we can begin building our solution and app.

The first step to creating our Power App that we can easily move between environments is creating a solution to contain our app. In addition to the canvas app the solution will also contain the various other components we require, including a Power Automate workflow and environment variables.

To do this we navigate to our development environment and then select Solutions in the left-hand menu. On the top menu select New solution and provide the solution a name and specify the publisher. For additional details visit this article.

Create a solution in Power Apps

Learn how to create a solution in Power Apps

After creating our solution, we will first create our environment variables that will define the app’s data source. Since the SharePoint site and list will be changing as we progress our app from development to test to production, we will create two environment variables. The first to specify the SharePoint Site, and the second to specify the SharePoint List on the site. For details on environment variables and how they can be modified when importing a solution to another environment visit this article.

Use environmental variables in solution – Power Apps

Use environment variables to migrate application configuration data in solutions

Here we will manually create the environment variables, so we have control over naming, but there is also the option to automatically create the environment variables from within our app. In the canvas app editor, select Settings on the top menu. Within the General section, locate the Automatically create environment variables when adding data sources setting, and toggle it to the desired value.

To manually add environment variables to our solution, select New on the top menu; under More we will find Environment variable. Provide the environment variable a name and under Data Type select Data source. In the Connector drop down select SharePoint, and select a valid SharePoint connection in the Connection drop down. For the first variable, under Parameter Type select Site, then select New site value and choose the required SharePoint site or provide the site URL. For the second environment variable, select List for the Parameter Type, then for the Site select the newly created site environment variable, and then select New list value and choose the required SharePoint List.

Creating the App in the Development Environment

Our app starts to come to life in our development environment. First, we focus on creating the app’s core features and functionalities. This is where our creativity and technical skills come into play. We can experiment with different designs, workflows, and integrations, all within the safe confines of the development environment. It’s a bit like being in a laboratory, where we can test hypotheses and make discoveries without worrying about breaking the version of the app our end users might be actively using.

First, we will connect to our data source using our environment variables. In the left side menu select the data menu, then add new data, and search for SharePoint. After selecting the SharePoint data source and connection, in the Connect to a SharePoint site pane select the Advanced tab and select the environment variable we created previously, and then do the same to select the list environment variable.

If we opted to not create the environment variables first, and ensured the automatically create environment variables when adding data source setting is turned on, we can provide a site URL and select a list. We will then be prompted that an environment variable will be automatically generated to store information about this data source.

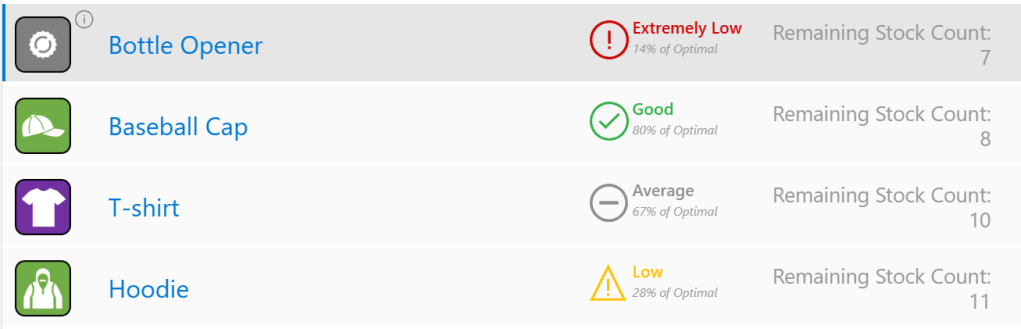

Once connected to our data source we will build out the basic functionality of our app. For this simplified app this includes a vertical navigation component and a gallery element to display the list items. Here is the base app that will be our launching point to build a more informative and dynamic app.

Extracting Environment Information

As we deploy our app to different environments, we will update the SharePoint site and list environment variables. Since the values of these environment variables will be distinct and specific to the environment, we can leverage this to help determine and show what environment the app is in.

Now, if we search the data sources that we can add to our app for “environment”, we will find an Environment Variable Values Dataverse source. This data source can be used to extract the values of our environment variables; however, it will give our app a Premium license designation. Premium licensing may not always be suitable or available, so we will explore an alternative method using a Power Automate workflow and our App.OnStart property.

Building the Power Automate Workflow

In the left side menu, select the Power Automate menu option, then create new workflow.

To extract and return the information we need to our app we will create a simple flow, consisting only of the trigger and the Respond to a PowerApp or flow action. Selecting the Create new flow button will create a Power Automate workflow with a PowerApps (V2) trigger. For this workflow we will not need to add any inputs to this trigger action.

In the workflow designer, select New action and search for Respond to a PowerApp or flow, and add the action to the workflow. Here we will add two outputs, the first to return the value of the SharePoint site environment variable and the second to return the value of the SharePoint list environment variable. Depending on our requirements, we may only need one of these values to determine the appropriate environment information. For the output value we can find our environment variables listed in the dynamic content.

The final workflow is shown below. Once created, give the workflow an informative name, then save and close the workflow.

Call the Workflow and Store the Outputs

On the start of our app, we will run our workflow and store the output values, which are the values of our environment variables, in a global variable within our app scope. We do this by using the App.OnStart property and setting a variable to store the outputs. We will add the following to the App.OnStart property.

Set(gblEnvironmentDetails, .Run())

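The Run function is called on the workflow itself, so it is prefixed with the name we gave the workflow when saving it. Here, we create the gblEnvironmentDetails global variable, which will store the outputs of our workflow. This variable has a record data type with values for both our sourcesiteurl and sourcelistid outputs.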

The App.OnStart event becomes crucial, as it sets the stage for the entire app session. Now, each time our app starts, our workflow will return the environment values we require, ensuring this information is available from the moment our app is launched.

We can visualize these values, and how they change between our environments, by adding labels to our app. We will add several environment detail labels. The first will display our SharePoint site environment variable value; set the text property of the label to the following.
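Because every label and color later in the guide depends on this one call, it can be worth surfacing a failed workflow run to the user. The following is only a sketch under stated assumptions: `GetEnvironmentDetails` is a hypothetical workflow name (use the name given to the workflow when it was saved), and it assumes formula-level error management is available in the app so that IfError and FirstError can be used.

```powerfx
// App.OnStart (sketch). GetEnvironmentDetails is a hypothetical workflow name.
IfError(
    // Same call as in the guide: store the workflow outputs in a global variable
    Set(gblEnvironmentDetails, GetEnvironmentDetails.Run()),
    // Evaluated only if the workflow call returns an error
    Notify(
        "Environment details could not be loaded: " & FirstError.Message,
        NotificationType.Error
    )
)
```

If the call fails, gblEnvironmentDetails stays empty, so any formula that reads it should include a sensible default.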

"SharePoint Site Environment Variable: " & gblEnvironmentDetails.sourcesiteurl

We will make this value a bit easier to work with and evaluate, by extracting the site name from the site url. We add another label to display the site name, and set the text property of the label to the following.

"SharePoint Site : " & Last(Split(gblEnvironmentDetails.sourcesiteurl, "/sites/")).Value

This expression splits the site url by the text “/sites/” and then returns all the text that follows it which is the site name.
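To make the behavior concrete, here is a small worked sketch using a hypothetical site URL (the contoso tenant name is an assumption for illustration only). The second expression is an optional, more tolerant variant that also copes with a trailing slash or a sub-path after the site name.

```powerfx
// Hypothetical input: "https://contoso.sharepoint.com/sites/PowerAppsDevSource"
// Split returns a single-column table (column name: Value) with two rows:
//   "https://contoso.sharepoint.com" and "PowerAppsDevSource"
// Last(...).Value picks the final row, which is the site name.
Last(
    Split("https://contoso.sharepoint.com/sites/PowerAppsDevSource", "/sites/")
).Value
// → "PowerAppsDevSource"

// Optional variant: keep only the text up to the next "/" after the site name,
// so ".../sites/PowerAppsDevSource/" still yields "PowerAppsDevSource".
First(
    Split(
        Last(Split(gblEnvironmentDetails.sourcesiteurl, "/sites/")).Value,
        "/"
    )
).Value
```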

Lastly, we add a text label to display the value stored in our SharePoint List environment variable by adding a new label and setting the text property to the following.

"SharePoint List Id : " & gblEnvironmentDetails.sourcelistid

Adding the Environmental Visual Cues

To make the environment distinction clear we will add environment-specific colors and text labels in our app’s design.

Adding Text Labels

We will start by adding an environment label in the header, that will be placed opposite of our app name. To do this we first create a named formula and then use this to set the text property of the new label in our header element. In the App.Formulas property add the following.

    nfmEnvironment = Switch(
        Last(Split(gblEnvironmentDetails.sourcesiteurl, "/sites/")).Value,
        "PowerAppsDevSource", "DEV",
        "PowerAppsTestSource", "TEST",
        "SalesandMarketing", "PROD",
        "UNKNOWN"
    );

This expression creates a new named formula, nfmEnvironment. It uses the Switch function to evaluate the site name of our SharePoint site environment variable, extracted with the same formula we used above, and returns our environment label. For our app, if the SharePoint site environment variable points to the Power Apps Dev Source site, nfmEnvironment returns DEV; when it points to Power Apps Test Source, it returns TEST; and when it points to our Sales and Marketing site, it returns PROD. The formula also includes a default value of UNKNOWN for when none of these conditions are true, which helps identify a potential error or an app data source set to an unexpected site.
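One thing to keep in mind is that the site name is matched as exact text. As a hedged sketch, assuming the match is sensitive to letter casing and that the environment variable might be entered with different casing in each environment, the site name could be normalized once before matching. `nfmSiteName` is a hypothetical helper name and is not part of the original app.

```powerfx
// App.Formulas (sketch): normalize the site name once, then match on it.
// nfmSiteName is a hypothetical helper named formula.
nfmSiteName = Lower(
    Last(Split(gblEnvironmentDetails.sourcesiteurl, "/sites/")).Value
);

// Same mapping as in the guide, compared against lower-case site names
nfmEnvironment = Switch(
    nfmSiteName,
    "powerappsdevsource", "DEV",
    "powerappstestsource", "TEST",
    "salesandmarketing", "PROD",
    "UNKNOWN"
);
```

The labels returned are unchanged, so everything that reads nfmEnvironment keeps working as described.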

We then add a new label to our header element and set the text property to nfmEnvironment. Additionally, the navigational component used in our app has a text input to display the environment label near the bottom under the user profile image. We will set the input value also to nfmEnvironment.

Environment-Specific Color Themes

Next, we will elevate our awareness when working with our apps across different environments by moving beyond just labels. We will now leverage different visual cues and color themes between the different environments. In our development environment the navigation component and header will be green, when in our testing environment these elements will be gray, and finally in production they will be blue.

The first step to include this functionality is to define our color theme that we can then use to set the color of our different elements depending on the value of nfmEnvironment. To create our color theme, we will create a new named formula. In the App.Formulas property we will add the following to create a named formula containing the basics of a color theme used within our app.

    nfmThemeColor =
    {
        // Primary palette (blue)
        primary: "#004F6B",
        onPrimary: "#FFFFFF",
        primaryFixed: "#9DC8EB",
        onPrimaryFixed: "#004D67",
        // Secondary palette (green)
        secondary: "#005C3F",
        onSecondary: "#FFFFFF",
        secondaryFixed: "#78CDA7",
        onSecondaryFixed: "#005138",
        // Tertiary palette (gray)
        tertiary: "#394C57",
        onTertiary: "#FFFFFF",
        tertiaryFixed: "#D0DDE4",
        onTertiaryFixed: "#364954",
        // Background and surface colors
        background: "#F6FAFE",
        onBackground: "#171C1F",
        surface: "#F6FAFE",
        onSurface: "#171C1F",
        surfaceContainerLowest: "#FFFFFF",
        surfaceContainerLow: "#F0F4F8",
        surfaceContainer: "#EAEEF2",
        surfaceContainerHigh: "#E5E9ED",
        surfaceContainerHighest: "#DFE3E7"
    };

This named formula now stores our different color values, and we can use it together with our nfmEnvironment formula to dynamically color our app’s elements.

We will start by setting the fill of the header. The header is a responsive horizontal container that holds our two text labels. We set the fill property of the container to the following expression.

    Switch(
        nfmEnvironment,
        "DEV", ColorValue(nfmThemeColor.secondary),
        "TEST", ColorValue(nfmThemeColor.tertiary),
        "PROD", ColorValue(nfmThemeColor.primary),
        Color.Black
    )

Adding the color cues follows a pattern similar to the one we used to set the environment label text. We use the Switch function to evaluate our nfmEnvironment value and, depending on the value, set the color to the secondary color (green), tertiary color (gray), or primary color (blue). If no condition is met, the header is set to black.

We then use the same expression for the vertical selection indicator bar and next arrow icon in our app’s gallery element. Next, we apply the same expression pattern to color the different aspects of the navigation element using the expressions below.

    //Navigation Background
    Switch(
        nfmEnvironment,
        "DEV", ColorValue(nfmThemeColor.secondaryFixed),
        "TEST", ColorValue(nfmThemeColor.tertiaryFixed),
        "PROD", ColorValue(nfmThemeColor.primaryFixed),
        Color.Black
    )

    //Selected Item Background
    Switch(
        nfmEnvironment,
        "DEV", ColorValue(nfmThemeColor.onSecondaryFixed),
        "TEST", ColorValue(nfmThemeColor.onTertiaryFixed),
        "PROD", ColorValue(nfmThemeColor.onPrimaryFixed),
        Color.Black
    )

    //Base Icon Color
    Switch(
        nfmEnvironment,
        "DEV", ColorValue(nfmThemeColor.onSecondaryFixed),
        "TEST", ColorValue(nfmThemeColor.onTertiaryFixed),
        "PROD", ColorValue(nfmThemeColor.onPrimaryFixed),
        Color.Black
    )
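Since the same Switch on nfmEnvironment is repeated for every colored property, one optional refinement is to resolve all environment colors once in a single named formula and reference its fields from each control. This is only a sketch of that idea, not how the app in this guide is built: `nfmEnvironmentPalette` and its field names (`header`, `navBackground`, `navContent`) are hypothetical, and it follows the DEV/green, TEST/gray, PROD/blue mapping described above.

```powerfx
// App.Formulas (sketch): one record of resolved colors per environment.
// nfmEnvironmentPalette and its field names are hypothetical.
nfmEnvironmentPalette = Switch(
    nfmEnvironment,
    "DEV", {
        header: ColorValue(nfmThemeColor.secondary),
        navBackground: ColorValue(nfmThemeColor.secondaryFixed),
        navContent: ColorValue(nfmThemeColor.onSecondaryFixed)
    },
    "TEST", {
        header: ColorValue(nfmThemeColor.tertiary),
        navBackground: ColorValue(nfmThemeColor.tertiaryFixed),
        navContent: ColorValue(nfmThemeColor.onTertiaryFixed)
    },
    "PROD", {
        header: ColorValue(nfmThemeColor.primary),
        navBackground: ColorValue(nfmThemeColor.primaryFixed),
        navContent: ColorValue(nfmThemeColor.onPrimaryFixed)
    },
    // Fallback when the environment is not recognized
    {
        header: Color.Black,
        navBackground: Color.Black,
        navContent: Color.Black
    }
);
```

With this in place, the header container's fill property could be set to nfmEnvironmentPalette.header and the navigation background to nfmEnvironmentPalette.navBackground, which keeps the environment-to-color mapping in one place.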

After adding the dynamic color themes to our navigation, our environment-aware app is complete.

We now have a clear and informative app that instantly informs users about the app’s current environment. Text labels and visual cues are simple yet effective ways to avoid confusion and ensure that everyone knows which version of the app they are interacting with.

Deploying to Different Environments

Now that our app is complete, we save and publish our version of the app and begin the process of deploying the app to the testing environment. First, we will edit each environment variable within our solution and remove the current site and current list values. This helps ensure the values we set for these variables in our development environment don’t carry over with our solution when we import it to different environments.

Then we export the solution and download the exported .zip file. Next, we switch to our test environment and import the solution. During the import process we are prompted to set our two environment variables, which drive the dynamic, environment-specific visual cues in our app. We set the values and finish the import, and can then view our app in the test environment.

We can then repeat the process to see our app in the production environment.

Wrapping Up: Ensuring Clarity and Efficiency in Your Power Apps

As we wrap up our exploration of visually distinguishing environments in Power Apps, remember that the key to a successful app lies in its clarity and user-friendliness. By implementing the techniques we have discussed, from color-coding and labeling elements of our app to using dynamic UI elements, we can significantly enhance the user experience. These strategies not only prevent confusion but also streamline our workflow across development, testing, and production environments. When we embrace these tips, we can make our Power Apps intuitive and efficient, ensuring that users always know exactly where they are and what they are working with.

Thank you for reading! Stay curious, and until next time, happy learning.

And remember, as Albert Einstein once said, “Anyone who has never made a mistake has never tried anything new.” So, don’t be afraid of making mistakes; practice makes perfect. Continuously experiment, explore, and challenge yourself with real-world scenarios.
